2025 Year in Review

A lightning-round collection of loose threads from 2025

About a year and a half ago, I was reading box scores one day and saw a game with a ton of hits but very few runs, which surprised me. Then I paused to wonder - should I be surprised? How should I expect runs to scale with hits? I’ve been watching baseball for a long time, but I wondered if my a priori expectations here were miscalibrated.

That night I quickly put together an ad hoc analysis which I posted here, but never quite felt proud of; the methodology wasn’t great. Rather than take it down, I’ve worked to improve it (the original post appears in its entirety at the bottom of the page). The ultimate question I wanted to answer is “conditional on the number of hits, what should I expect for the number of runs?” To do so, I’ve developed a model with the primary goal of creating a better baseline for my personal expectations.

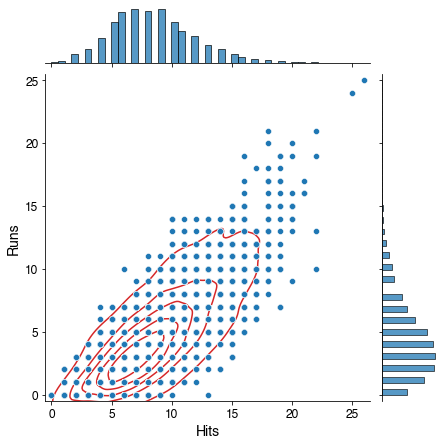

Just looking at the raw distribution of this parameter space for 2018 data:

There’s a lot to note here. First, most of these points represent many, many games, and this distribution is overwhelmed by the data in the lower corner of the distribution. This is emphasized both by the density contours shown in red and the histograms on each axis. However, another key feature here is that the width of the distribution spreads out as the number of hits increases. This is a quality known as heteroscedasticity, a word which I will never be able to spell right on the first try. It’s important to note that traditional linear regression assumes the data are homoscedastic (constant variance), so that assumption is violated in this case.
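One quick way to check for this kind of spreading is to group games by hit count and compare the standard deviation of runs in each group. The sketch below is a minimal illustration, not the code from the notebook; the function name `spread_by_hits` and the toy `(hits, runs)` pairs are made up for the example and are not the 2018 data.

```python
from collections import defaultdict
from statistics import mean, pstdev

def spread_by_hits(games):
    """Group runs by hit count; return {hits: (mean_runs, std_runs, n_games)}."""
    runs_at = defaultdict(list)
    for hits, runs in games:
        runs_at[hits].append(runs)
    return {h: (mean(r), pstdev(r), len(r)) for h, r in sorted(runs_at.items())}

# Made-up (hits, runs) pairs for illustration only, not the 2018 data.
toy_games = [(3, 0), (3, 1), (3, 1), (3, 2), (9, 2), (9, 4), (9, 6), (9, 8)]

summary = spread_by_hits(toy_games)
for hits, (avg, sd, n) in summary.items():
    print(f"{hits} hits: mean runs {avg:.2f}, std {sd:.2f}, n={n}")
```

If the standard deviation climbs steadily with the hit count, as it does in this toy data, a constant-variance model is a poor fit.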

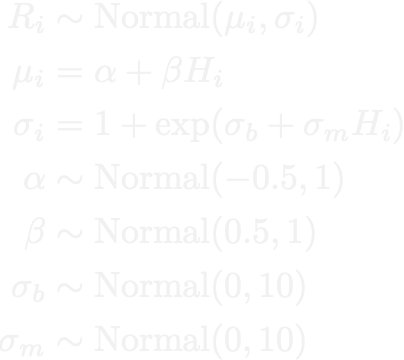

After considering several different ways to model this, I ultimately used a Bayesian linear regression model (using PyMC3), with an extra trick to manage the heteroscedasticity. While standard linear regression assumes constant variance, instead I used a linear model for both the mean and variance of the outcome variable. That is to say, we assume that the number of runs is normally distributed, in which both the mean and variance of that normal distribution scale linearly with respect to the number of hits. Expressly, the model is:
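Written out, with \(x_i\) the number of hits and \(y_i\) the number of runs in game \(i\), the structure described above has the following form. The coefficient names \(\alpha\) and \(\beta\) are notation chosen here for illustration, the normal distribution is parameterized by its mean and standard deviation, and the priors on the coefficients are omitted:

\[
\begin{aligned}
y_i &\sim \mathcal{N}(\mu_i, \sigma_i) \\
\mu_i &= \alpha_\mu + \beta_\mu x_i \\
\sigma_i &= \exp(\alpha_\sigma + \beta_\sigma x_i)
\end{aligned}
\]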

Here you can see two linear models, one for each parameter of the normal distribution. I’ve applied a trick to \(\sigma_i\), the standard deviation, transforming the linear model with an exponential function in order to ensure that the value is positive. In terms of a directed acyclic graph, this looks like:
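To make the exponential trick concrete, here is a minimal plain-Python sketch of the mean and standard deviation as functions of hits, along with the normal log-likelihood that such a model is conditioned on. This is not the PyMC3 code from the notebook: the function names, the coefficient names (`a_mu`, `b_mu`, `a_sigma`, `b_sigma`), and all numeric values are hypothetical.

```python
import math

def predict(hits, a_mu, b_mu, a_sigma, b_sigma):
    """Return (mean, standard deviation) of runs for a given hit count."""
    mu = a_mu + b_mu * hits
    # The exponential maps any real-valued linear predictor to a positive sigma.
    sigma = math.exp(a_sigma + b_sigma * hits)
    return mu, sigma

def log_likelihood(games, a_mu, b_mu, a_sigma, b_sigma):
    """Sum of normal log-densities of runs given hits over (hits, runs) pairs."""
    total = 0.0
    for hits, runs in games:
        mu, sigma = predict(hits, a_mu, b_mu, a_sigma, b_sigma)
        z = (runs - mu) / sigma
        total += -0.5 * z * z - math.log(sigma) - 0.5 * math.log(2 * math.pi)
    return total

# Hypothetical coefficients and toy games, chosen only to exercise the functions.
params = dict(a_mu=-0.5, b_mu=0.6, a_sigma=0.0, b_sigma=0.08)
toy_games = [(4, 1), (7, 3), (10, 6), (12, 9)]

print(predict(5, **params))
print(log_likelihood(toy_games, **params))
```

A Bayesian fit places priors on the four coefficients and samples from the posterior, but the likelihood term has this same shape.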

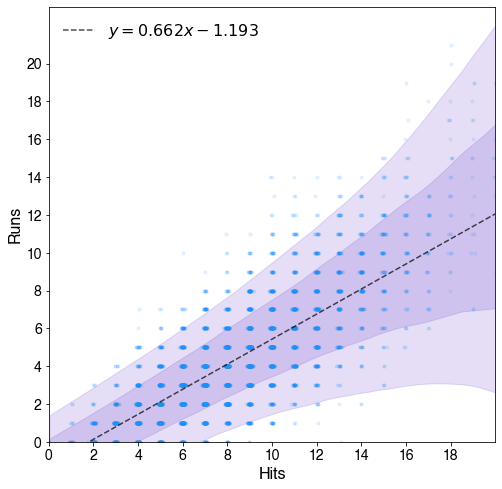

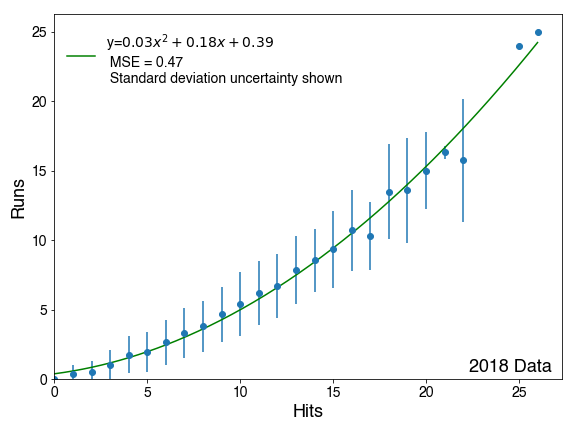

This model is then conditioned on the data from 2018, and yields the following distribution, with the 1 and 2 standard deviation bands shown.
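For reference, the 1 and 2 standard deviation bands at a given hit count follow directly from the two linear models. The sketch below treats the coefficients as fixed point values, which is a simplification of the full posterior, and every name and number in it is hypothetical rather than a fitted result. It also checks how much probability a normal distribution places inside and below the 1 sigma band.

```python
import math
from statistics import NormalDist

def credible_bands(hits, a_mu, b_mu, a_sigma, b_sigma):
    """Return the mean and the 1 and 2 standard deviation bands for runs."""
    mu = a_mu + b_mu * hits
    sigma = math.exp(a_sigma + b_sigma * hits)
    return {
        "mean": mu,
        "1sd": (mu - sigma, mu + sigma),
        "2sd": (mu - 2 * sigma, mu + 2 * sigma),
    }

# Hypothetical coefficients for illustration, not the fitted values.
params = dict(a_mu=-0.5, b_mu=0.6, a_sigma=0.0, b_sigma=0.08)
for hits in (5, 10):
    print(hits, credible_bands(hits, **params))

# Probability mass of a normal distribution inside and below the 1 sigma band.
standard = NormalDist()
coverage_1sd = standard.cdf(1) - standard.cdf(-1)
below_1sd = standard.cdf(-1)
print(f"inside 1 sigma: {coverage_1sd:.3f}, below the band: {below_1sd:.3f}")
```

Because the standard deviation grows with hits, the bands fan out to the right instead of running parallel to the mean line.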

This model has good coverage of the data and accounts for the heteroscedasticity pretty well. Several other models were considered, including a standard normally distributed linear regression and a more robust regression using a Student’s t-distribution, and this one proved to be the best in terms of information criteria metrics.

The takeaway here is that while we expect the number of runs to scale with the number of hits, the range we expect should be very broad, even broader than my default expectations. With 5 hits, for example, we can be 68% confident that there will be between 1 and 3 runs, but our 95% credible interval is anywhere from 0 to 5 runs, so nothing in that range should surprise us. It’s not until 8 hits that 0 runs falls out of the 95% credible interval. Considering that more than 1 in 8 hits in 2018 were home runs (13.6%), one of those 8 hits is likely to be a home run and so automatically a run, which makes this not entirely surprising.
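As a rough check on that home run argument, suppose each hit is independently a home run with probability 0.136. The independence is a simplifying assumption made only for this back-of-the-envelope estimate; it is not part of the model. The chance that at least one of 8 hits is a home run is then:

\[
1 - (1 - 0.136)^{8} = 1 - 0.864^{8} \approx 0.69
\]

So under that assumption, roughly two out of three 8-hit games include at least one hit that scores a run on its own.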

I think the points of reference I will keep in mind from this in future baseball watching are that at 5 hits, 0 runs falls out of the 68% credible interval, and at 6 hits, 1 run falls out. Teams that don’t meet those benchmarks are below the 1 sigma band, which puts them in roughly the lower 16% of run conversion with respect to hits (the 32% outside a 68% interval is split evenly between the two tails). On the flip side, teams producing more runs than hits are all above the 1 sigma band. Again I can use 5 hits as a reference here - at 5 hits, 4 runs puts a team in roughly the upper 16%, so I can keep in mind \((N-1)\) runs for \(N\) hits as a well-above-average result. At 9 hits, this becomes \((N-2)\).

The code, along with additional plots, model comparisons, and more is available on my GitHub in this Jupyter notebook.

Recently, after seeing a box score with many hits but no runs, I’ve been thinking a bit about run expectancy based on the number of hits in a game. Obviously these are going to be correlated, but I was curious how strongly, and how well you could predict runs given the number of hits.

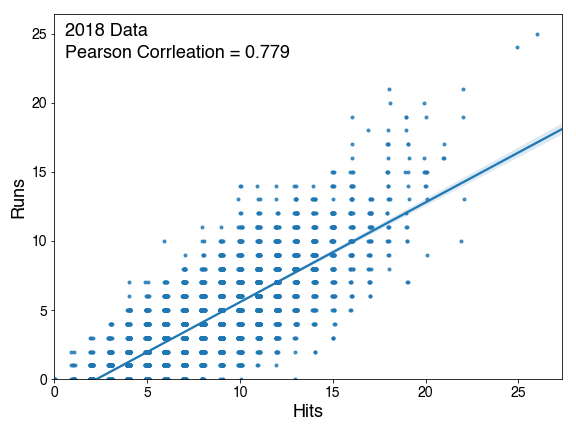

I went and looked at 2018 data for runs scored based on number of hits and did a linear regression:

As expected, the two are strongly correlated: the Pearson correlation is 0.779. To get to some prediction method, I calculated the mean number of runs at every hit value, plotted those values, and then tried to fit that data.
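For reference, the Pearson correlation is the covariance of the two variables divided by the product of their standard deviations. The sketch below computes it from scratch; the function name `pearson_r` and the toy hit and run totals are made up for illustration and are not the 2018 data.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up hit and run totals for illustration only.
hits = [3, 5, 6, 8, 9, 12]
runs = [0, 2, 1, 4, 3, 7]
print(round(pearson_r(hits, runs), 3))
```

A value near 1 means runs rise almost in lockstep with hits, while a value near 0 means hits say little about runs.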

Looking at just the means, a linear fit does not match the data well, so I used a polynomial fit of order 2, which does. The coefficient on the \(x^2\) term appears small, but once you get to 6 hits, it raises the expectation by an additional run. Once you add standard deviation uncertainty lines to this plot, though, you can see that within the 1 sigma (68%) interval, a linear fit could probably work just as well for this data.
